Introduction: The Voice-First Imperative

Voice search analytics has become a key differentiator in SEO strategy for 2026, reshaping how we understand user intent, optimize content, and measure success. With an estimated 8.4 billion voice-enabled devices in active use globally, voice interactions offer insight into conversational queries, semantic patterns, and user behavior that traditional search metrics cannot capture. This shift demands a recalibration of SEO methodologies, moving beyond keyword density and backlink profiles toward natural language processing (NLP) alignment and AI assistant optimization.

The analytics revolution isn't merely about tracking voice queries; it's about understanding the layers of context, intent, and conversational flow that define how people naturally seek information. Picture yourself asking your voice assistant a question right now. The phrasing, the nuance, the expectation of an immediate, accurate response: that is the standard your SEO strategy must meet.

The Technical Architecture of Voice Search Analytics

Multi-Dimensional Data Collection Frameworks

Voice search analytics in 2026 operates on sophisticated multi-layer data collection systems that capture far more than query strings. Modern analytics platforms now track:

- Phonetic variations and accent patterns that reveal regional linguistic differences

- Query reformulation sequences showing how users refine voice searches in real-time

- Contextual metadata including time of day, device type, and location coordinates

- Sentiment markers extracted through tone analysis and speech pattern recognition

- Conversation thread continuity tracking follow-up queries within sessions

These data points feed into machine learning models that construct comprehensive user intent profiles. Unlike traditional click-through rates, voice search SEO metrics focus on answer accuracy, response latency, and conversational satisfaction scores, which more closely reflect how AI assistants select and rank answers.
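As a rough illustration, the signals listed above could be captured in a single event record. This is a minimal sketch: the `VoiceQueryEvent` class and every field name in it are hypothetical and do not come from any particular analytics platform.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class VoiceQueryEvent:
    """One voice query plus its contextual signals (illustrative field names)."""
    session_id: str
    transcript: str
    timestamp: datetime
    device_type: str                         # e.g. "smart_speaker" or "phone"
    locale: str                              # e.g. "en-GB", a proxy for regional variation
    latitude: Optional[float] = None
    longitude: Optional[float] = None
    sentiment: Optional[float] = None        # assumed scale: -1.0 (negative) to 1.0 (positive)
    previous_query_id: Optional[str] = None  # links a follow-up to the query before it

    def is_follow_up(self) -> bool:
        """True when this query continues an earlier one in the same session."""
        return self.previous_query_id is not None


event = VoiceQueryEvent(
    session_id="s-001",
    transcript="where can I get my bike repaired near me",
    timestamp=datetime(2026, 1, 15, 8, 30, tzinfo=timezone.utc),
    device_type="phone",
    locale="en-GB",
)
print(event.is_follow_up())
```

Keeping the follow-up link on the event itself makes it straightforward to rebuild conversation threads later without a separate lookup table.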

Neural Network Integration for Pattern Recognition

The technical backbone of voice search analytics relies on transformer-based neural networks that process natural language with human-like comprehension. These systems employ:

- Bidirectional encoder representations (as in BERT) that draw context from both preceding and following words

- Attention mechanisms that weight the importance of different query components

- Entity recognition layers that identify brands, locations, and products within conversational flow

- Intent classification algorithms that categorize queries by commercial, informational, or navigational purpose

For SEO professionals, this means optimizing for semantic clusters rather than individual keywords. SEO in 2026 demands content that satisfies entire conversational pathways, not isolated search terms.
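To make the three intent categories concrete, here is a deliberately simple keyword baseline. It is not a transformer model and is not how any assistant classifies queries. The cue words and the `classify_intent` function are assumptions chosen for illustration only.

```python
import re

# Hypothetical cue words; a production system would learn these from labeled data.
INTENT_CUES = {
    "commercial": {"buy", "price", "cheap", "order", "deal", "cost"},
    "navigational": {"open", "login", "website", "launch"},
}


def classify_intent(query: str) -> str:
    """Return 'commercial', 'navigational', or 'informational' for a query."""
    tokens = set(re.findall(r"[a-z']+", query.lower()))
    for intent, cues in INTENT_CUES.items():
        if tokens & cues:          # any cue word present in the query
            return intent
    return "informational"         # default when no cue word matches


for q in [
    "How much does a new bike chain cost?",
    "Open the Acme Bikes website",
    "Why do bike chains rust",
]:
    print(q, "->", classify_intent(q))
```

A baseline like this is mainly useful as a sanity check against a learned classifier: if the two disagree on a large share of queries, the training labels deserve a second look.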

Conversational Query Optimization: Beyond Keywords

Long-Tail Conversational Patterns

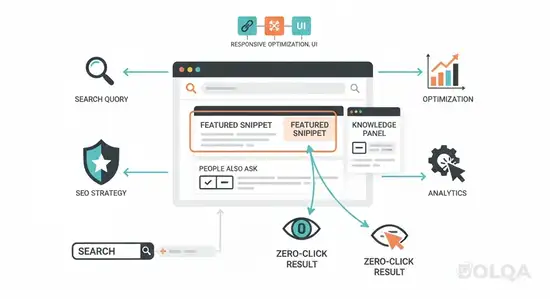

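Voice search trends show that voice queries tend to be longer and more conversational than typed searches, which typically run only 3-4 words. (The widely quoted 29-word figure describes the typical length of a spoken answer, not of a query.) This expansion requires a fundamental shift in content architecture. Successful voice search SEO now prioritizes: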

- Question-based heading structures that mirror natural speech patterns

- Contextual answer blocks positioned for featured snippet extraction

- Conversational bridging language that connects related concepts fluidly

- Anticipatory content sequencing that addresses likely follow-up questions

Analytics platforms now measure "conversational completion rates": the percentage of users who receive a satisfactory answer without reformulating their query. This metric has become a leading indicator of how well content is optimized.
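One way to compute such a rate is sketched below, assuming each logged query carries a session ID and a flag marking it as a reformulation. Both the input shape and the function name are hypothetical, and a real platform may define the metric differently.

```python
from collections import defaultdict


def conversational_completion_rate(events):
    """Share of sessions that contain no reformulated query.

    events: iterable of (session_id, is_reformulation) pairs.
    """
    reformulated = defaultdict(bool)
    for session_id, is_reformulation in events:
        reformulated[session_id] = reformulated[session_id] or is_reformulation
    if not reformulated:
        return 0.0
    completed = sum(1 for flag in reformulated.values() if not flag)
    return completed / len(reformulated)


events = [
    ("s1", False),
    ("s2", False), ("s2", True),   # user rephrased the question
    ("s3", False),
    ("s4", False), ("s4", True),   # user rephrased the question
]
rate = conversational_completion_rate(events)
print(f"{rate:.0%}")
```

Here two of the four sessions finish without a rephrased query, so the rate is 50%.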

Semantic Depth and Entity Relationships

Voice search analytics expose the intricate web of entity relationships that AI assistants use to construct responses. Your content must now explicitly define:

- Primary entity attributes and classifications

- Relationship hierarchies between concepts

- Synonymous terms and colloquial variations

- Causal and temporal connections between topics

Schema markup has evolved from optional enhancement to necessity. Voice-oriented structured data, such as schema.org's speakable property, identifies the passages best suited to being read aloud as spoken answers.
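One widely used form of structured data for question-and-answer content is schema.org's FAQPage type. The sketch below builds that JSON-LD from a list of question and answer pairs. The helper name `faq_jsonld` and the sample text are illustrative assumptions, and whether a given assistant actually consumes this markup is platform-dependent.

```python
import json


def faq_jsonld(pairs):
    """Build a schema.org FAQPage document from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


doc = faq_jsonld([
    ("How often should I oil a bike chain?",
     "Roughly every 150 to 200 kilometres, or after riding in the rain."),
])
# Embed the result in a <script type="application/ld+json"> tag on the page.
print(json.dumps(doc, indent=2))
```

Generating the markup from the same source as the visible question-and-answer copy helps keep the two from drifting apart.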

AI Assistants Optimization: Platform-Specific Strategies

Algorithmic Divergence Across Ecosystems

Each major AI assistant platform, including Google Assistant, Alexa, and Siri, employs distinct ranking algorithms with unique optimization requirements. Voice search analytics in 2026 provides platform-specific performance metrics that enable targeted strategy refinement:

Google Assistant prioritizes E-E-A-T signals (Experience, Expertise, Authoritativeness, Trustworthiness) with heavy weighting toward structured data and knowledge graph integration.

Alexa favors content from Amazon's proprietary ecosystem and third-party skills, requiring API integration and voice application development for maximum visibility.

Siri emphasizes Apple's curated knowledge sources and business listings, making Apple Maps optimization and Business Connect profiles critical components.

Analytics dashboards now segment performance by platform, revealing which AI assistants drive traffic, conversions, and engagement, so that resources can be allocated toward the highest-performing channels.
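Platform segmentation of this kind comes down to a grouped aggregation. A minimal sketch follows, assuming each session record is a pair of platform label and conversion flag. The record shape and platform labels are hypothetical.

```python
from collections import Counter


def conversion_rate_by_platform(records):
    """records: iterable of (platform, converted) pairs, one per session."""
    sessions = Counter()
    conversions = Counter()
    for platform, converted in records:
        sessions[platform] += 1
        if converted:
            conversions[platform] += 1
    return {p: conversions[p] / sessions[p] for p in sessions}


records = [
    ("google_assistant", True), ("google_assistant", False),
    ("google_assistant", False), ("google_assistant", False),
    ("alexa", True), ("alexa", False),
    ("siri", False),
]
rates = conversion_rate_by_platform(records)
for platform, value in sorted(rates.items(), key=lambda item: -item[1]):
    print(f"{platform}: {value:.0%}")
```

With so few sessions per platform the rates are noisy, so in practice a minimum sample size per segment is worth enforcing before reallocating budget.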

Voice Action Conversion Tracking

The most sophisticated development in voice search analytics is transaction attribution. Advanced tracking now connects voice queries to:

- E-commerce purchases initiated through voice commands

- Local business actions (calls, directions, reservations)

- Content consumption (article readings, video plays)

- App installations and account registrations

This attribution modeling provides concrete ROI metrics for voice optimization investments, transforming voice optimization from an experimental initiative into a core business strategy.
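A simple way to picture this is last-touch attribution: each conversion is credited to the most recent voice query from the same user within a time window. The sketch below assumes that model, a 30-minute window, and tuple-shaped inputs. All of these are illustrative choices, not a description of how any vendor attributes conversions.

```python
from datetime import datetime, timedelta


def attribute_last_touch(queries, conversions, window=timedelta(minutes=30)):
    """Credit each conversion to the user's latest query within the window.

    queries:     list of (user_id, asked_at, query_text)
    conversions: list of (user_id, converted_at, action)
    Returns a list of (action, query_text or None).
    """
    attributed = []
    for user_id, converted_at, action in conversions:
        candidates = [
            (asked_at, text)
            for query_user, asked_at, text in queries
            if query_user == user_id
            and timedelta(0) <= converted_at - asked_at <= window
        ]
        last_query = max(candidates, key=lambda c: c[0])[1] if candidates else None
        attributed.append((action, last_query))
    return attributed


start = datetime(2026, 3, 1, 12, 0)
queries = [
    ("u1", start, "order cat food"),
    ("u1", start + timedelta(minutes=10), "reorder the same cat food"),
    ("u2", start, "pizza near me"),
]
conversions = [
    ("u1", start + timedelta(minutes=12), "purchase"),
    ("u2", start + timedelta(hours=2), "call"),   # outside the window
]
result = attribute_last_touch(queries, conversions)
print(result)
```

The purchase is credited to the second query, while the call two hours later stays unattributed. Widening the window trades missed attributions for more false ones.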

Technical Implementation: Analytics Infrastructure

Real-Time Processing Requirements

Implementing effective voice search analytics requires infrastructure capable of processing streaming data with minimal latency. Technical requirements include:

- Event-driven architecture with millisecond-level timestamp precision

- Natural language processing pipelines for real-time query analysis

- Distributed data warehouses capable of handling petabyte-scale voice datasets

- API integrations with major voice platforms' developer consoles

Leading organizations now employ dedicated voice analytics engineers who specialize in acoustic feature extraction and conversational flow mapping—roles that barely existed two years ago.

Privacy-Compliant Data Collection

Voice data introduces unique privacy considerations. Compliant analytics frameworks implement:

- On-device processing for sensitive query components

- Anonymized voice fingerprinting that prevents personal identification

- Explicit consent mechanisms for recording storage

- Automatic purging protocols for compliance with GDPR, CCPA, and emerging regulations

Balancing analytical depth with privacy protection has become a defining technical challenge for SEO professionals navigating SEO in 2026.
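Two of the safeguards above, hiding raw identifiers and purging old records, can be sketched with the Python standard library. The key, the 30-day retention period, and the record layout are assumptions for illustration. Note too that keyed hashing is pseudonymization rather than full anonymization, so the output may still count as personal data under GDPR.

```python
import hashlib
import hmac
from datetime import datetime, timedelta, timezone


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace a raw identifier with a keyed HMAC-SHA256 digest."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def purge_expired(records, now, retention=timedelta(days=30)):
    """Keep only records whose timestamp falls within the retention period."""
    return [r for r in records if now - r["timestamp"] <= retention]


key = b"demo-key-rotate-me"   # hypothetical; load from a secrets manager in practice
token = pseudonymize("user-42", key)

now = datetime(2026, 6, 1, tzinfo=timezone.utc)
records = [
    {"user": token, "timestamp": now - timedelta(days=5)},
    {"user": token, "timestamp": now - timedelta(days=45)},   # past retention
]
kept = purge_expired(records, now)
print(len(kept))
```

Using a keyed hash rather than a plain one means the mapping cannot be rebuilt by hashing a list of known user IDs unless the key also leaks.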

Strategic Implications: Adapting Your SEO Framework

The integration of voice search analytics necessitates organizational restructuring. Successful teams now include:

- Conversational content strategists who architect question-answer frameworks

- Voice UX designers who optimize for audio-first experiences

- Speech data scientists who extract insights from acoustic features

- Platform-specific optimizers who master individual AI assistant ecosystems

Investment in voice analytics platforms—ranging from enterprise solutions like Adobe Analytics for Voice to specialized tools like Voiceflow and Dashbot—has become non-negotiable for competitive SEO operations.

The competitive advantage lies not in simply collecting voice data, but in developing proprietary analytical models that reveal patterns your competitors miss. Custom machine learning models trained on your specific domain can identify micro-trends in conversational queries before they appear in generalized analytics tools.

Conclusion: Voice-First or Fall Behind

Voice search analytics represents the most significant paradigm shift in SEO since mobile-first indexing. Organizations that treat voice optimization as peripheral rather than central will find themselves increasingly invisible in an AI-mediated search landscape. The technical sophistication required—from NLP integration to platform-specific optimization to privacy-compliant data collection—demands immediate investment and specialized expertise.

The question isn't whether voice will matter for search in 2026; the analytics already indicate that it does. The question is whether your SEO strategy reflects this reality. Begin by auditing your current voice search presence, implementing comprehensive analytics tracking, and restructuring content around conversational intent. SEO in 2026 rewards those who speak their audience's language, literally.

What voice search analytics insights will you implement first? Share your strategy in the comments below.
